Brave New Camera: Computational Photography

Computational photography is a developing field that combines the elements of traditional photography with additional computer-performed processing. It is pushing the limits of what the word photography means. New processes can overcome the limitations of a camera, encode more information in a single file than was previously possible, or even create new ways of understanding images. In this article you'll learn about the three main emerging fields of computational photography.

Defining Traditional Digital Photography

What we think of as “digital photography” has dominated the field of photography for the past decade. In it, the camera attempts to emulate how a film camera works. This is what I’ll refer to as traditional digital photography.

At every level, traditional digital photography draws on its film predecessor: from obvious outward signs, such as the physical design of the camera (an idea that is only now being challenged by mirrorless interchangeable-lens cameras), to the underlying assumptions and concepts of how an image is captured.

In film photography, light enters through a lens and is projected, according to the lens’s specific optics and focus, onto the film. Light-sensitive particles on the film react to whatever photons fall on them, capturing the information as closely as their physical properties allow. The camera doesn’t capture what the photographer sees; it captures what the camera sees, although if all goes well the two should match relatively closely.

Some data is always lost or corrupted by the photographic process. If the lens is incorrectly focussed, or is a wide-angle lens that introduces distortion, those optical traits are captured by the film along with everything else. A film camera captures only a tiny sample of the available information.

Traditional digital photography simply replaces film with a digital sensor: light-sensitive photo-sites replace light-sensitive particles. The camera reacts in the same way, linearly capturing the scene as faithfully as possible. Photons fall on the sensor and, subject to the specifics of the lens, the information is recorded at the sensor’s resolution. Some processing is applied to the photo-site data to develop the final image into a photograph that suits our experience of vision, but it is designed to stay as true as possible to what the camera captured. The camera doesn’t try to make the image more than what it saw.

Going Beyond Traditional Digital Photography

While there are obvious historical, technological and artistic reasons why traditional digital photography mimics film photography, things have started to change over the past few years. The assumption that a camera should capture a scene as faithfully as possible is being challenged. Instead, new photographic methods have been developed that treat the captured image, or images, as the starting point from which the final image is processed or created.

Ramesh Raskar of the MIT Media Lab and Jack Tumblin of Northwestern University, both researchers in computational photography, have identified three phases in the development of the field: epsilon photography, which develops processes by which cameras can overcome their inherent limitations; coded photography, where the captured image can be altered after the fact; and essence photography, where the very concept of what makes an image is challenged.

Epsilon Photography

Unlike coded or essence photography, images captured using epsilon photography techniques often appear, on the surface, to be regular digital photographs. Multiple shots are taken, and one or more traditional camera parameters (focus, focal length, shutter speed, aperture, ISO and the like) are changed by a small amount between shots, hence “epsilon”, the mathematician’s symbol for a small quantity. The shots are then combined algorithmically into a single image that extends the capabilities of the camera.

Take, for example, High Dynamic Range (HDR) photography, the most popular form of epsilon photography. Multiple shots of a single scene are captured, each at a different exposure value. Because the exposure varies, the series as a whole covers a far greater dynamic range than the camera can record in one frame: the under-exposed shots hold more highlight detail, and the over-exposed shots more shadow detail, than any single exposure could.
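
To make the merging step concrete, here is a minimal Python sketch of one common approach: estimate each pixel's scene brightness (radiance) from every frame, then take a weighted average that trusts well-exposed mid-tones and ignores clipped or near-black values. It assumes a linear sensor response and pixel values normalised to the range 0 to 1; real HDR software also recovers the camera's response curve and aligns the frames first. The names `hat_weight` and `merge_hdr` are hypothetical.

```python
from typing import List, Sequence


def hat_weight(z: float) -> float:
    """Triangle weight: 1.0 for a mid-grey pixel, 0.0 for pure black or clipped white."""
    return 1.0 - abs(2.0 * z - 1.0)


def merge_hdr(frames: Sequence[Sequence[float]], times: Sequence[float]) -> List[float]:
    """Estimate relative radiance for each pixel from a bracketed series.

    Assumes a linear sensor, so pixel_value / exposure_time is one radiance
    estimate per frame; the estimates are blended using hat_weight.
    """
    radiance = []
    for i in range(len(frames[0])):
        num = 0.0
        den = 0.0
        for frame, t in zip(frames, times):
            z = frame[i]
            w = hat_weight(z)
            num += w * z / t
            den += w
        if den > 0.0:
            radiance.append(num / den)
        else:
            # Every frame was black or clipped here: fall back to a plain average.
            radiance.append(sum(f[i] / t for f, t in zip(frames, times)) / len(times))
    return radiance


# Simulate three brackets of a two-pixel scene: a shadow (0.2) and a bright sky (3.0).
scene = [0.2, 3.0]
times = [0.25, 1.0, 4.0]
frames = [[min(1.0, L * t) for L in scene] for t in times]  # the sensor clips at 1.0
print(merge_hdr(frames, times))  # both values recovered, up to float rounding
```

The triangular weighting is one common choice rather than the only one; what matters is that clipped and near-black pixels, which carry no reliable information, contribute little or nothing.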

When the shots are combined, the final image reflects the dynamic range of the series, not the camera. Other than that, it appears as a regular digital photo.
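
A merged HDR result is a set of radiance values spanning a far wider range than a screen or print can show, so the last step, usually called tone mapping, compresses it back into an ordinary image. Here is a minimal Python sketch of one simple global tone-mapping curve, L / (1 + L), in the style of the operator described by Erik Reinhard and colleagues. The function names, the 0.18 "key" (a mid-grey target) and the 2.2 display gamma are illustrative assumptions; real HDR software typically uses more sophisticated local operators on colour images.

```python
import math
from typing import List, Sequence


def tone_map(radiance: Sequence[float], key: float = 0.18, eps: float = 1e-6) -> List[float]:
    """Compress radiance values into [0, 1] with a global L / (1 + L) curve."""
    # Log-average (geometric mean) brightness of the scene, used to set exposure.
    log_avg = math.exp(sum(math.log(eps + L) for L in radiance) / len(radiance))
    mapped = []
    for L in radiance:
        scaled = key * L / log_avg               # map average scene brightness to `key`
        mapped.append(scaled / (1.0 + scaled))   # bright values approach 1.0
    return mapped


def to_8bit(values: Sequence[float], gamma: float = 2.2) -> List[int]:
    """Gamma-encode [0, 1] values for display and quantise to 0..255."""
    return [round(255 * v ** (1.0 / gamma)) for v in values]


# Six orders of magnitude of scene brightness squeezed into one displayable range.
radiance = [0.01, 1.0, 100.0, 10000.0]
print(to_8bit(tone_map(radiance)))
```

Because the curve only approaches 1.0, even the brightest value here lands below pure white rather than clipping, while the darkest stays distinguishable from black.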

HDR isn’t the only epsilon photography technique. Other parameters can be varied too, to create “impossible images” that, for example, have greater depth of field than any camera could feasibly record in a single shot.
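
Extending depth of field this way is usually called focus stacking. Given several aligned frames focused at different distances, the idea is to keep each pixel from whichever frame is locally sharpest. This minimal Python sketch measures sharpness as the absolute Laplacian (how much a pixel differs from its four neighbours) summed over a small window. The function names and the grayscale list-of-lists image format are assumptions for illustration; real stacking tools also align the frames and blend the seams.

```python
from typing import List, Sequence

Image = List[List[float]]


def laplacian_abs(img: Image, y: int, x: int) -> float:
    """|sum of 4 neighbours - 4 * centre|, repeating edge pixels at the borders."""
    h, w = len(img), len(img[0])
    total = 0.0
    for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        ny = min(max(y + dy, 0), h - 1)
        nx = min(max(x + dx, 0), w - 1)
        total += img[ny][nx]
    return abs(total - 4.0 * img[y][x])


def sharpness(img: Image, y: int, x: int, radius: int) -> float:
    """Sum of laplacian_abs over a (2 * radius + 1)-wide square window around (y, x)."""
    h, w = len(img), len(img[0])
    return sum(
        laplacian_abs(img, yy, xx)
        for yy in range(max(0, y - radius), min(h, y + radius + 1))
        for xx in range(max(0, x - radius), min(w, x + radius + 1))
    )


def focus_stack(frames: Sequence[Image], radius: int = 1) -> Image:
    """For every pixel, copy the value from the frame that is sharpest there."""
    h, w = len(frames[0]), len(frames[0][0])
    result = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            best = max(range(len(frames)), key=lambda k: sharpness(frames[k], y, x, radius))
            result[y][x] = frames[best][y][x]
    return result


# Two shots of a fine checkerboard: `near` is in focus on the left, `far` on the right.
# Out-of-focus regions are modelled as flat grey (0.5).
h, w = 3, 6
checker = [[float((x + y) % 2) for x in range(w)] for y in range(h)]
near = [[checker[y][x] if x < 3 else 0.5 for x in range(w)] for y in range(h)]
far = [[checker[y][x] if x >= 3 else 0.5 for x in range(w)] for y in range(h)]
print(focus_stack([near, far]) == checker)  # True: the stack is sharp everywhere
```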

Coded Photography

While epsilon photography aims to extend how well the camera can represent a scene, coded photography tries to encode as much of the available information as possible into a single photograph. It is with coded photography that the traditional language of photography begins to become inadequate. A coded photograph contains far more information than a single image can show; instead, it can be decoded to recreate the scene in different ways. In other words, one photograph contains more than one possible image.

Light-field photography is one area of coded photography that has been explored commercially in the last few years. A light-field camera, such as one from Lytro, places an array of tiny lenses in front of the sensor so that it records not only how much light reaches each point but also the direction it arrives from, in effect capturing the scene from many slightly different points of view at once. All of this information is encoded into a single photograph file.

In post-production, the information in the file can be decoded into any number of possible images. Because the direction of the incoming light has been recorded, the apparent plane of focus can be moved after the shot is taken. With more advanced processing, the relative position of every object in the photograph can also be triangulated, so the foreground, mid-ground and background can be intelligently separated and treated differently. The subject can appear to have been shot at f/16 while the background dissolves into the kind of bokeh you’d get at f/1.8.
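
Refocusing after the fact can be illustrated with the classic "shift-and-add" method from light-field research. A light-field capture can be rearranged into a grid of slightly offset "sub-aperture" views; shifting every view in proportion to its offset and averaging them brings one depth into alignment (sharp) while everything else smears into blur. This minimal Python sketch assumes the views have already been extracted, uses whole-pixel shifts, and uses hypothetical names and sign conventions; it is not a description of Lytro's own software.

```python
from typing import Dict, List, Tuple

Image = List[List[float]]


def shift_image(img: Image, dy: int, dx: int) -> Image:
    """Move image content by (dy, dx) pixels, repeating edge pixels where needed."""
    h, w = len(img), len(img[0])
    return [
        [img[min(max(y - dy, 0), h - 1)][min(max(x - dx, 0), w - 1)] for x in range(w)]
        for y in range(h)
    ]


def refocus(views: Dict[Tuple[int, int], Image], slope: float) -> Image:
    """Shift-and-add refocusing.

    `views` maps an aperture offset (u, v) to the image seen from that offset.
    `slope` is the shift, in pixels per unit of offset, that brings the chosen
    focal plane into register; changing it moves the plane of focus.
    """
    first = next(iter(views.values()))
    h, w = len(first), len(first[0])
    total = [[0.0] * w for _ in range(h)]
    for (u, v), img in views.items():
        shifted = shift_image(img, round(slope * v), round(slope * u))
        for y in range(h):
            for x in range(w):
                total[y][x] += shifted[y][x]
    n = len(views)
    return [[value / n for value in row] for row in total]


# A single bright point whose image moves 2 pixels per unit of viewpoint offset.
size, disparity = 9, 2
views = {}
for u in (-1, 0, 1):
    for v in (-1, 0, 1):
        img = [[0.0] * size for _ in range(size)]
        img[4 - disparity * v][4 - disparity * u] = 1.0
        views[(u, v)] = img

print(refocus(views, slope=2)[4][4])  # 1.0: in focus, all nine views line up
print(refocus(views, slope=0)[4][4])  # about 0.11: only the centre view contributes
```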

Another type of coded photography uses multiple exposures, either of a subject at known intervals or from multiple cameras, and compares or combines the images to interpolate new information. For example, with a moving subject and a static camera, the subject's speed between frames can be calculated and used to remove much of the motion blur from the resulting photograph. With a static subject and a moving camera (or multiple synchronized cameras), a three-dimensional scan of the subject can be computed. This is how your favourite sports hero's head makes it onto a digital body in video games. The Microsoft Kinect camera and Google Maps' Street View are two high-profile coded photography projects.
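
The geometry behind both examples is simple enough to sketch. For a subject photographed at a known interval, speed is displacement over time. For two cameras a known distance apart (the baseline), a point's depth follows by similar triangles from how far it shifts between the two images (its disparity): depth = focal length × baseline / disparity. This minimal Python sketch assumes an idealised, calibrated and rectified pair of cameras, with focal length measured in pixels; the function names are hypothetical, and real systems must first work out which pixels correspond, which is the hard part.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def image_speed(p0: Point, p1: Point, interval_s: float) -> float:
    """Speed, in pixels per second, of a feature seen at p0 and then at p1."""
    return math.hypot(p1[0] - p0[0], p1[1] - p0[1]) / interval_s


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance in metres to a point seen by two parallel, rectified cameras.

    Similar triangles give Z = f * B / d: nearby points shift a lot
    between the two views, distant points hardly at all.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


print(image_speed((0.0, 0.0), (30.0, 40.0), 0.5))  # 100.0 pixels per second
print(depth_from_disparity(1000.0, 0.1, 20.0))      # 5.0 metres
```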

Coded photography techniques have a funny habit of bending, if not breaking, the idea of the frame. Since the beginning of photography, photographs have been bounded either by the fall-off at the edge of the lens's image circle or by an arbitrary frame. This is not how our eyes see, but we've learned to read photographs as static, immutable images with firm borders. Coded photography is eroding this assumption.

Essence Photography

While epsilon and coded photography attempt to record the scene in a manner that is consistent with how the human eye sees things, essence photography ignores the idea of emulating biological vision. Instead, essence photography plays with different ways that the information in a scene can be understood and represented.

Google’s Deep Dream project, which took the Internet by storm in 2015, is an entertaining example of essence photography. Deep Dream takes a base image and uses a neural network trained to recognise objects to search it for the patterns the network has learned. The sections of the image that most closely match those patterns are transformed to resemble them even more, and the result is run through the process again and again to produce the final version.

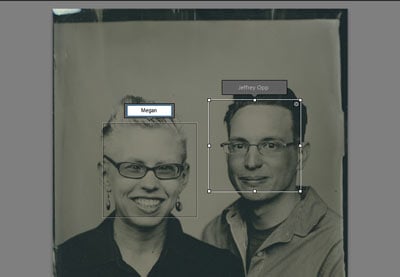

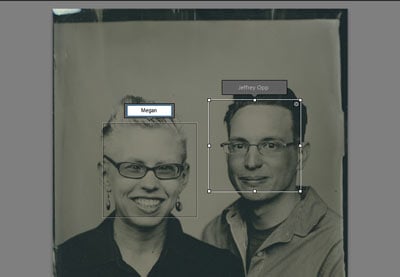
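
The feedback loop at the heart of Deep Dream can be illustrated with a drastically simplified toy. Real Deep Dream uses a large convolutional neural network trained on photographs and performs gradient ascent on the image to boost the activations of a chosen layer. In this one-dimensional Python sketch a single fixed kernel stands in for that network: each step nudges the "pixels" in the direction that most increases the detector's total squared response, so regions that already resemble the pattern change the most. The kernel, step size and function names are assumptions for illustration only.

```python
from typing import List, Sequence


def responses(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """How strongly each window of the signal matches the kernel (a 'valid' correlation)."""
    k = len(kernel)
    return [
        sum(w * signal[i + j] for j, w in enumerate(kernel))
        for i in range(len(signal) - k + 1)
    ]


def score(signal: Sequence[float], kernel: Sequence[float]) -> float:
    """The quantity being maximised: total squared detector response."""
    return sum(a * a for a in responses(signal, kernel))


def dream_step(signal: Sequence[float], kernel: Sequence[float], step: float) -> List[float]:
    """One gradient-ascent step on score(); strongly responding windows change most."""
    grad = [0.0] * len(signal)
    for i, a in enumerate(responses(signal, kernel)):
        for j, w in enumerate(kernel):
            grad[i + j] += 2.0 * a * w  # d(a_i ** 2) / d signal[i + j] = 2 * a_i * w_j
    # Normalise the gradient so each step has a predictable size.
    largest = max(abs(g) for g in grad) or 1.0
    return [x + step * g / largest for x, g in zip(signal, grad)]


def dream(signal: Sequence[float], kernel: Sequence[float],
          iterations: int = 20, step: float = 0.1) -> List[float]:
    """Feed the output back in repeatedly, as Deep Dream does."""
    out = list(signal)
    for _ in range(iterations):
        out = dream_step(out, kernel, step)
    return out


# A faint bump in a flat signal, and a "bump detector" kernel.
kernel = [-1.0, 2.0, -1.0]
signal = [0.0] * 8 + [0.2] + [0.0] * 8
dreamed = dream(signal, kernel)
print(round(score(signal, kernel), 3), round(score(dreamed, kernel), 3))  # score grows
```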

Some less fantastical essence photography processes might already be at your fingertips. Related pattern-recognition algorithms power the facial recognition features in Adobe Lightroom, on Facebook and in many other places.

Over the next few years there will be more, and more profound, developments in essence photography. Computers will get better at pulling and transforming information from base shots. More than any other area of computational photography, essence photography has the potential to transform the way we talk about photographs, and to allow for new ways of artistic expression.

Brave New Camera

Computational photography is a developing field that is challenging the very definition of photography. Traditional digital photography is concerned with ever more faithful ways of recreating film photography with a digital sensor instead of silver halide. The field of computational photography is creating new ways of image-making that are machine-readable representations of our experience. That is a big shift.

Techniques in computational photography range from simply combining multiple images to overcome a camera’s limitations to developing novel methods for analysing and processing images. In this article I’ve briefly touched on a few of the many interesting areas of the field. While some of these new technologies may seem a bit gimmicky today, the advances they represent are the first real changes in photography in a very long time, perhaps since the invention of the portable 35mm film camera.

Will traditional digital photography disappear? Unlikely. After all, people still enjoy taking photographs on film! They like the creative, technological and social experience of photographing with traditional cameras, and traditional digital photography shows no sign of slowing down.

However, these new technologies have the potential to change the way we think about photography at its foundations, altering the way we define cameras and images. In some ways, computational photography already has. HDR, though much maligned by traditionalists, is popular because it can capture vastly more information than a single exposure. Google Maps' Street View has altered the way we think about and navigate the world. We don't yet know just how much computational photography will change the world.

One thing, though, is clear: truly digital photography has arrived. It's not just pixels in place of silver; it is something entirely new.